graph LR

Agent --"takes *action*"--> Environment

Environment --"observes *state*, *reward*"--> Agent

2 Markov Decision Processes

2.1 Introduction

Machine learning studies algorithms that learn to solve a task “on their own”, without needing a programmer to implement handwritten “if statements”. Reinforcement learning (RL) is a branch of machine learning that focuses on decision problems like the following:

Example 2.1 (Decision problems)

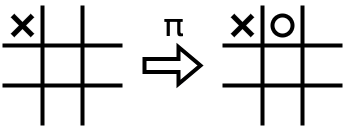

All of these tasks involve taking a sequence of actions in order to achieve some goal. This interaction loop can be summarized in the following diagram:

In this chapter, we’ll investigate the most popular mathematical formalization for such tasks: Markov decision processes (MDPs). We will study dynamic programming (DP) algorithms for solving tasks when the rules of the environment are totally known. We’ll describe how to evaluate different policies and how to compute (or approximate) the optimal policy for a given MDP. We’ll introduce the Bellman consistency condition, which allows us to analyze the whole sequence of interactions in terms of individual timesteps.

Remark 2.1 (Further generalizations). In most real tasks, we don’t explicitly know the rules of the environment, or can’t concisely represent them on a computer. We will deal with this in future chapters; this chapter assumes the environment is known and accessible, so there’s nothing to learn about the environment.

Additionally, in many tasks, only a subset of the state is visible to the observer. Such partially observed environments are out of scope of this textbook; see Section 2.6 for additional resources.

Code

from utils import NamedTuple, Float, Array, partial, jax, jnp, latexify, latex

from tabulate import tabulate

import numpy as np

from IPython.display import Markdown, display2.2 Introduction to Markov decision processes

To gain a better grasp of decision processes and identify the key features that we want to formalize, let us frame the robotic control task in ex. 2.1 as a decision problem.

Example 2.2 (Robotic control as a decision problem) Suppose the goal is to move a robot to a certain position.

- The state consists of the positions and velocities of the robot’s joints.

- The action consists of the forces to apply to the robot’s motors.

- The state transitions are essentially the rules of physics: after applying a certain force to the joints, their positions and velocities would change according to physical law.

- We reward positions that are closer to the desired position. To be more energy-efficient, we could also deduct reward for applying a lot of force, or add other terms that describe the ideal behaviour.

Exercise 2.1 (Identifying decision problems) For each of the other examples in ex. 2.1, what information should the state include? What might the valid set of actions be? Describe the state transitions heuristically, and a reward function that describes how the task should be solved.

In many sequential decision-making problems, the state transitions only depend on the current state and action. For example, in ex. 2.2, if we use Newton’s laws of physics to compute the state transitions, then just knowing the current positions and velocities is enough to calculate the next positions and velocities, since Newton’s laws are second-order. We say that such state transitions satisfy the Markov property.

Definition 2.1 (Markov property (informal)) An environment’s state transitions satisfy the Markov property if “what happens next” only depends on the current state of the environment and not on the history of how we got here.

We will formally define the Markov property in def. 2.5 after introducing some notation for describing MDPs.

Exercise 2.2 (Checking for the Markov property) Look back at the state transitions you described in Exercise 2.1. Do they satisfy the Markov property? (For chess, consider the threefold repetition rule: if the same position occurs three times during the game, either player may claim a draw.)

Sequential decision-making problems that satisfy the Markov property are called Markov decision processes (MDPs). MDPs are the core setting of modern RL research. We can further classify MDPs based on whether or not the task eventually ends.

Definition 2.2 (Episodic tasks) Tasks that have a well-defined start and end, such as a game of chess or a football match, are called episodic. Each episode starts afresh and ends once the environment reaches a terminal state. The number of steps in an episode is called its horizon.

Definition 2.3 (Continuing tasks) On the other hand, continuing tasks, such as managing a business’s inventory, have no clear start or end point. The agent interacts with the environment in an indefinite loop.

Consider an episodic task where the horizon is fixed and known in advance. That is, the agent takes up to \(H\) actions, where \(H\) is some finite positive integer. We model such tasks with a finite-horizon MDP. Otherwise, if there’s no limit to how long the episode can continue or if the task is continuing, we model the task with an infinite-horizon MDP. We’ll begin with the finite-horizon case in Section 2.3 and discuss the infinite-horizon case in Section 2.4.

2.3 Finite-horizon Markov decision processes

Many real-world tasks, such as most sports games or video games, end after a fixed number of actions from the agent. In each episode:

- The environment starts in some initial state \(s_0\).

- The agent observes the state and takes an action \(a_0\).

- The environment updates to state \(s_1\) according to the action.

Steps 2 and 3 are repeated \(H\) times, resulting in a sequence of states and actions \(s_0, a_0, \dots, s_{H-1}, a_{H-1}, s_H\), where \(s_H\) is some terminal state. Here’s the notation we will use to describe an MDP:

Definition 2.4 (Finite-horizon Markov decision process) The components of a finite-horizon Markov decision process are:

The state that the agent interacts with. We use \(s_h\) to denote the state at time \(h\). \(\mathcal{S}\) denotes the set of possible states, called the state space.

The actions that the agent can take. We use \(a_h\) to denote the action at time \(h\). \(\mathcal{A}\) denotes the set of possible actions, called the action space.

An initial state distribution \(P_0 \in \triangle(\mathcal{S})\).

The state transitions (a.k.a. dynamics) \(P : \mathcal{S} \times \mathcal{A} \to \triangle(\mathcal{S})\) that describe what state the agent transitions to after taking an action. We write \(P(s_{h+ 1} \mid s_h, a_h)\) for the probability of transitioning to state \(s_{h+1}\) when starting in state \(s_h\) and taking action \(a_h\). Note that we use the letter \(P\) for the state transition function and the symbol \(\mathbb{P}\) more generally to indicate probabilities of events.

The reward function. In this course, we’ll take it to be a deterministic function on state-action pairs, \(r : \mathcal{S} \times \mathcal{A} \to \mathbb{R}\), but many results will extend to a stochastic reward signal. We will use \(r_{h} := r(s_h, a_h)\) to denote the reward obtained at time \(h\).

A time horizon \(H\in \mathbb{N}\) that specifies the maximum number of interactions in a trajectory, that is, a single sequence of states, actions, and rewards:

\[ (s_0, a_0, r_0, \dots, s_{H-1}, a_{H-1}, r_{H-1}). \tag{2.1}\]

(Some sources omit the action and reward at the final time step.)

Combined together, these objects specify a finite-horizon Markov decision process:

\[ \mathcal{M} = (\mathcal{S}, \mathcal{A}, P_0, P, r, H). \tag{2.2}\]

When there are finitely many states and actions, i.e. \(|\mathcal{S}|, |\mathcal{A}| < \infty\), we can express the relevant quantities as vectors and matrices (i.e. tables of values):

\[ \begin{aligned} P_0 &\in [0, 1]^{|\mathcal{S}|} & P &\in [0, 1]^{(|\mathcal{S} \times \mathcal{A}|) \times |\mathcal{S}|} & r &\in \mathbb{R}^{|\mathcal{S}| \times |\mathcal{A}|} \end{aligned} \]

(Verify that the types and shapes provided above make sense!)

Code

class MDP(NamedTuple):

"""A description of a Markov decision process with finitely many states and actions."""

S: int # number of states

A: int # number of actions

mu: Float[Array, " S"]

P: Float[Array, "S A S"] # "current" state, "current" action, "next" state

r: Float[Array, "S A"]

H: int # the horizon

gamma: float = 1.0 # discount factor (used later)

class Transition(NamedTuple):

"""A single state-action-reward interaction with the environment.

A trajectory consists of a sequence of transitions.

"""

s: int

a: int

r: floatDefinition 2.5 (Markov property) A decision process satisfies the Markov property if the next state is independent from the past states and actions when conditioned on the current state and action:

\[ \mathbb{P}(s_{h+1} \mid s_0, a_0, \dots, s_h, a_h) = \mathbb{P}(s_{h+1} \mid s_h, a_h) \]

By their definition, Markov decision processes satisfy the Markov property. This is because the state transitions are defined using the function \(P\), which only takes in a single state-action pair to transition from.

Example 2.3 (Tidying MDP) Let’s consider a simple decision problem throughout this chapter: the task of keeping your room tidy.

Your room has the possible states \(\mathcal{S} = \{ \text{orderly}, \text{messy} \}.\) You can take either of the actions \(\mathcal{A} = \{ \text{ignore}, \text{tidy} \}.\) The room starts off orderly, that is, \(P_0(\text{orderly}) = 1\).

The state transitions are as follows: if you tidy the room, it becomes (or remains) orderly; if you ignore the room, it might become messy, according to the probabilities in tbl. 2.1.

The rewards are as follows: You get penalized for tidying an orderly room (a waste of time) or ignoring a messy room, but you get rewarded for ignoring an orderly room (since you can enjoy your additional time). Tidying a messy room is a chore that gives no reward.

Consider a time horizon of \(H= 7\) days (one interaction per day). Let \(t = 0\) correspond to Monday and \(t = 6\) correspond to Sunday.

Code

tidy_mdp = MDP(

S=2, # 0 = orderly, 1 = messy

A=2, # 0 = ignore, 1 = tidy

mu=jnp.array([1.0, 0.0]), # start in orderly state

P=jnp.array([

[

[0.7, 0.3], # orderly, ignore

[1.0, 0.0], # orderly, tidy

],

[

[0.0, 1.0], # messy, ignore

[1.0, 0.0], # messy, tidy

],

]),

r=jnp.array([

[

1.0, # orderly, ignore

-1.0, # orderly, tidy

],

[

-1.0, # messy, ignore

0.0, # messy, tidy

]

]),

H=7,

)

Markdown(tabulate(

zip(*[

['orderly', 'orderly', 'messy', 'messy'],

['ignore', 'tidy', 'ignore', 'tidy'],

tidy_mdp.P[:, :, 0].flatten(),

tidy_mdp.P[:, :, 1].flatten(),

tidy_mdp.r.flatten(),

]),

[

r"$s$",

r"$a$",

r"$P(\text{orderly} \mid s, a)$",

r"$P(\text{messy} \mid s, a)$",

r"$r(s, a)$",

]

))| \(s\) | \(a\) | \(P(\text{orderly} \mid s, a)\) | \(P(\text{messy} \mid s, a)\) | \(r(s, a)\) |

|---|---|---|---|---|

| orderly | ignore | 0.7 | 0.3 | 1 |

| orderly | tidy | 1 | 0 | -1 |

| messy | ignore | 0 | 1 | -1 |

| messy | tidy | 1 | 0 | 0 |

We’ll now introduce several core concepts when working with MDPs.

Definition 2.6 (Policies) A policy \(\pi\) describes the agent’s strategy: which actions it takes in a given situation. A key goal of RL is to find the optimal policy that maximizes the total reward on average.

There are three axes along which policies can vary: their outputs, inputs, and time-dependence.

- Outputs: Deterministic or stochastic. A deterministic policy outputs actions while a stochastic policy outputs distributions over actions.

Inputs: history-independent or history-dependent. A history-independent (a.k.a. “Markovian”) policy only depends on the current state, while a history-dependent policy depends on the sequence of past states, actions, and rewards. We’ll only consider history-independent policies in this course.

Stationary or time-dependent. A stationary (a.k.a. time-homogeneous) policy keeps the same strategy at all time steps, while a time-dependent policy can depend on the current timestep. For consistency with states and actions, we will denote the timestep as a subscript, i.e. \(\pi = \{ \pi_0, \dots, \pi_{H-1} \}.\)

Note that for finite state and action spaces, we can represent a randomized mapping \(\mathcal{S} \to \triangle(\mathcal{A})\) as a matrix \(\pi \in [0, 1]^{\mathcal{S} \times \mathcal{A}}\) where each row describes the policy’s distribution over actions for the corresponding state.

Example 2.4 (Policies for the tidying MDP) Here are some possible deterministic policies for the tidying MDP ex. 2.3:

- Always tidy: \(\pi(s) = \text{tidy}\).

- Only tidy on weekends: \(\pi_h(s) = \text{tidy}\) if \(h\in \{ 5, 6 \}\) and \(\pi_h(s) = \text{ignore}\) otherwise.

- Only tidy if the room is messy: \(\pi_h(\text{messy}) = \text{tidy}\) and \(\pi_h(\text{orderly}) = \text{ignore}\) for all \(h\).

Code

# arrays of shape (H, S, A) represent time-dependent policies

tidy_policy_always_tidy = (

jnp.zeros((7, 2, 2))

.at[:, :, 1].set(1.0)

)

tidy_policy_weekends = (

jnp.zeros((7, 2, 2))

.at[5:7, :, 1].set(1.0)

.at[0:5, :, 0].set(1.0)

)

tidy_policy_messy_only = (

jnp.zeros((7, 2, 2))

.at[:, 1, 1].set(1.0)

.at[:, 0, 0].set(1.0)

)Exercise 2.3 (Conditional independence for future states) Suppose actions are chosen according to a history-independent policy. Use the chain rule of probability to show that, conditioned on the current state and action \(s_h, a_h\), all future states \(s_{h'}\) where \(h' > h\) are conditionally independent of the past states and actions. That is, for all \(h' > h\),

\[ \mathbb{P}(s_{h'} \mid s_0, a_0, \dots, s_h, a_h) = \mathbb{P}(s_{h'} \mid s_h, a_h). \]

Definition 2.7 (Trajectory distribution) Once we’ve chosen a policy, we can sample trajectories by repeatedly choosing actions according to the policy and observing the rewards and updated states returned by the environment. This generative process induces a distribution \(\rho^{\pi}\) over trajectories. (We assume that \(P_0\) and \(P\) are clear from context.)

Example 2.5 (Trajectories in the tidying environment) Here is a possible trajectory for the tidying example:

| \(h\) | \(0\) | \(1\) | \(2\) | \(3\) | \(4\) | \(5\) | \(6\) |

|---|---|---|---|---|---|---|---|

| \(s\) | orderly | orderly | orderly | messy | messy | orderly | orderly |

| \(a\) | tidy | ignore | ignore | ignore | tidy | ignore | ignore |

| \(r\) | \(-1\) | \(1\) | \(1\) | \(-1\) | \(0\) | \(1\) | \(1\) |

Could any of the policies in ex. 2.4 have generated this trajectory?

Note that for a history-independent policy, using the Markov property (def. 2.5), we can write down the likelihood function of this probability distribution in an autoregressive way (i.e. one timestep at a time):

Theorem 2.1 (Trajectory distributions of history-independent policies) Let \(\pi\) be a history-independent policy. Then its trajectory distribution \(\rho^\pi\) (def. 2.7) over sequences of actions and states \((s_0, a_0, \dots, s_{H-1}, a_{H-1})\) can be written as

\[ \rho^{\pi}(\tau) := P_0(s_0) \pi_0(a_0 \mid s_0) P(s_1 \mid s_0, a_0) \cdots P(s_{H-1} \mid s_{H-2}, a_{H-2}) \pi_{H-1}(a_{H-1} \mid s_{H-1}). \tag{2.3}\]

Note that we use a deterministic reward function, so we only consider randomness over the states and actions.

Exercise 2.4 (Trajectory distribution for stochastic reward) Modify eq. 2.3 for the case when the reward function is stochastic, that is, \(r : \mathcal{S} \times \mathcal{A} \to \triangle(\mathbb{R})\).

graph LR

S0($$s_0$$) -- $$\pi_0$$ --> A0{{$$a_0$$}}

S0 & A0 --> R0[$$r_0$$]

A0 & S0 --> S1($$s_1$$)

S1 -- $$\pi_1$$ --> A1{{$$a_1$$}}

S1 & A1 --> R1[$$r_1$$]

A1 & S1 --> S2($$s_2$$)

S2 -- $$\pi_2$$ --> A2{{$$a_2$$}}

S2 & A2 --> R2[$$r_2$$]

A2 & S2 --> S3($$...$$)

class S0,S1,S2,S3 state

class A0,A1,A2 action

class R0,R1,R2 reward

classDef state fill: lightgreen;

classDef action fill: lightyellow;

classDef reward fill: lightblue;

Code

def trajectory_log_likelihood(

mdp: MDP,

traj: list[Transition],

pi: Float[Array, "S A"],

) -> float:

"""Compute the log-likelihood of a trajectory under a given MDP and policy."""

# initial distribution and action

total = jnp.log(mdp.mu[traj[0].s])

total += jnp.log(pi[traj[0].s, traj[0].a])

# remaining state transitions and actions

for i in range(1, mdp.H):

total += jnp.log(mdp.P[traj[i - 1].s, traj[i - 1].a, traj[i].s])

total += jnp.log(pi[traj[i].s, traj[i].a])

return totalFor a deterministic policy \(\pi\), we have that \(\pi_h(a \mid s) = \mathbf{1}\left\{a = \pi_h(s)\right\}\); that is, the probability of taking an action is \(1\) if it’s the unique action prescribed by the policy for that state and \(0\) otherwise. In this case, the only randomness in sampling trajectories comes from the initial state distribution \(P_0\) and the state transitions \(P\).

2.3.1 Policy evaluation

Recall that the core goal of an RL algorithm is to find a policy that maximizes the expected total reward

\[ \mathop{\mathbb{E}}[r_0 + \cdots + r_{H-1}], \tag{2.4}\]

where \(r_h= r(s_h, a_h)\). Note that the quantity \(r_0 + \cdots + r_{H-1}\) is a random variable whose distribution depends on the policy’s trajectory distribution \(\rho^\pi\) (def. 2.7). We need tools for describing the expected total reward achieved by a given policy, starting in specific states and actions. This will allow us to compare the quality of different policies and compute the optimal policy.

Definition 2.8 (Value function) Let \(\pi\) be a history-independent policy. Its value function at time \(h\) returns the expected remaining reward when starting in a specific state:

\[ V_h^\pi(s) := \mathop{\mathbb{E}}_{\tau \sim \rho^\pi} [r_h+ \cdots + r_{H-1} \mid s_h= s] \tag{2.5}\]

Definition 2.9 (Action-value function) Similarly, a history-independent policy \(\pi\)’s action-value function (aka Q function) at time \(h\) returns its expected remaining reward, starting in a specific state and taking a specific action:

\[ Q_h^\pi(s, a) := \mathop{\mathbb{E}}_{\tau \sim \rho^\pi} [r_h+ \cdots + r_{H-1} \mid s_h= s, a_h= a]. \tag{2.6}\]

graph LR

S0($$s_h = s$$) -- $$\pi_h$$ --> A0{{$$a_h$$}}

S0 & A0 --> R0[$$r_h$$]

A0 & S0 --> S1("$$s_{h+1}$$")

S1 -- "$$\pi_{h+1}$$" --> A1{{"$$a_{h+1}$$"}}

S1 & A1 --> R1["$$r_{h+1}$$"]

A1 & S1 --> S2("$$s_{h+2}$$")

S2 -- "$$\pi_{h+2}$$" --> A2{{"$$a_{h+2}$$"}}

S2 & A2 --> R2["$$r_{h+2}$$"]

A2 & S2 --> S3("...")

class S0,R0,R1,R2 thick

classDef thick stroke-width: 4px;

class S0,S1,S2,S3 state

class A0,A1,A2 action

class R0,R1,R2 reward

classDef state fill: lightgreen;

classDef action fill: lightyellow;

classDef reward fill: lightblue;

graph LR

S0($$s_h = s$$) ~~~ A0{{$$a_h = a$$}}

S0 & A0 --> R0[$$r_h$$]

A0 & S0 --> S1("$$s_{h+1}$$")

S1 -- "$$\pi_{h+1}$$" --> A1{{"$$a_{h+1}$$"}}

S1 & A1 --> R1["$$r_{h+1}$$"]

A1 & S1 --> S2("$$s_{h+2}$$")

S2 -- "$$\pi_{h+2}$$" --> A2{{"$$a_{h+2}$$"}}

S2 & A2 --> R2["$$r_{h+2}$$"]

A2 & S2 --> S3("...")

class S0,A0,R0,R1,R2 thick;

classDef thick stroke-width: 4px;

class S0,S1,S2,S3 state

class A0,A1,A2 action

class R0,R1,R2 reward

classDef state fill: lightgreen;

classDef action fill: lightyellow;

classDef reward fill: lightblue;

These two functions define each other in the following way:

Theorem 2.2 (Relating \(V^\pi\) and \(Q^\pi\)) Let \(\pi\) be a history-independent policy. The value of a state is the expected action-value in that state over actions drawn from the policy:

\[ V_h^\pi(s) = \mathop{\mathbb{E}}_{a \sim \pi_h(\cdot \mid s)} [Q_h^\pi(s, a)]. \tag{2.7}\]

The value of a state-action pair is the sum of the immediate reward and the expected value of the following state:

\[ Q_h^\pi(s, a) = r(s, a) + \mathop{\mathbb{E}}_{s' \sim P(s, a)} [V_{h+1}^\pi(s')]. \tag{2.8}\]

Exercise 2.5 (Proof of relationship between \(V^\pi\) and \(Q^\pi\)) Use the law of iterated expectations to show eq. 2.7. Now apply linearity of expectation to show eq. 2.8. Where did you use the assumption that \(\pi\) doesn’t depend on the past states and actions?

Remark 2.2 (Computing \(Q^\pi\) from \(V^\pi\) requires environment). Note that all you need to compute \(V_h^\pi\) from \(Q_h^\pi\) (eq. 2.7) is knowledge of the policy \(\pi\). On the other hand, to compute \(Q_h^\pi\) from \(V_{h+1}^\pi\) (eq. 2.8), you need knowledge of the reward function and state transitions to look ahead one step. In later chapters, we’ll study problems where the environment is unknown, making it more useful to approximate \(Q_h^\pi\) than \(V_h^\pi\).

Code

def q_to_v(

policy: Float[Array, "S A"],

q: Float[Array, "S A"],

) -> Float[Array, " S"]:

"""

Compute the value function for a given policy in a known finite MDP

at a single timestep from its action-value function.

"""

return jnp.average(q, weights=policy, axis=1)

def v_to_q(

mdp: MDP,

v_next: Float[Array, " S"],

) -> Float[Array, "S A"]:

"""

Compute the action-value function in a known finite MDP

at a single timestep from the corresponding value function.

"""

# the discount factor is relevant later

return mdp.r + mdp.gamma * mdp.P @ v_next

# convert a list of v functions to a list of q functions

v_ary_to_q_ary = jax.vmap(v_to_q, in_axes=(None, 0))Putting eq. 2.7 and eq. 2.8 together reveals the Bellman consistency equations. By simply considering the cumulative reward as the sum of the immediate reward and the remaining reward, we can describe the value function in terms of itself. The resulting system of equations is named after Richard Bellman (1920–1984), who is credited with introducing dynamic programming in 1953.

Theorem 2.3 (Bellman consistency equations) For a history-independent policy \(\pi\),

\[ V_h^\pi(s) = \mathop{\mathbb{E}}_{\substack{a \sim \pi_h(s) \\ s' \sim P(s, a)}} [r(s, a) + V_{h+1}^\pi(s')]. \tag{2.9}\]

The proof is left as Exercise 2.5. This is a system of \(H |\mathcal{S}|\) equations (one equation per state per timestep) in \(H |\mathcal{S}|\) unknowns (the values \(V_h^\pi(s)\)), so \(V^\pi\) is the unique solution, as long as no two states are exactly identical to each other.

Code

def check_bellman_consistency_v(

mdp: MDP,

policy: Float[Array, "H S A"],

v_ary: Float[Array, "H S"],

) -> bool:

"""

Check that the given (time-dependent) "value function"

satisfies the Bellman consistency equation.

"""

return all(

jnp.allclose(

# lhs

v_ary[h],

# rhs

jnp.sum(policy[h] * (mdp.r + mdp.gamma * mdp.P @ v_ary[h + 1]), axis=1),

)

for h in range(mdp.H - 1)

)Remark 2.3 (The Bellman consistency equation for deterministic policies). Note that for history-independent deterministic policies, the Bellman consistency equations simplify to

\[ V_h^\pi(s) = r(s, \pi_h(s)) + \mathop{\mathbb{E}}_{s' \sim P(s, \pi_h(s))} [V_{h+1}^\pi(s')]. \tag{2.10}\]

How can we actually evaluate a given policy, that is, compute its value function?

Suppose we start with some guess \(v_h: \mathcal{S} \to \mathbb{R}\) for the state values at time \(h= 0, \dots, H-1\). We write \(v\) in lowercase to indicate that it might not be an actual value function of a policy. How might we improve this guess?

Recall that the Bellman consistency equations (eq. 2.9) hold for the value functions of history-independent policies. So if \(v\) is close to the target \(V^\pi\), it should nearly satisfy the system of equations associated with \(\pi\). With this in mind, suppose we replace \(V^\pi_{h+1}\) with \(v_{h+1}\) on the right-hand side. Then the new right-hand side quantity,

\[ \mathop{\mathbb{E}}_{\substack{a \sim \pi_h(s) \\ s' \sim P(s, a)}} [r(s, a) + v_{h+1}(s')], \tag{2.11}\]

can be thought of as follows: we take one action according to \(\pi\), observe the immediate reward, and evaluate the next state using \(v\). This is illustrated in fig. 2.6 below. This operation gives us an updated estimate of the value of \(V^\pi_h(s)\) that is at least as accurate as applying \(v(s)\) directly.

graph LR

S0($$s$$) -- $$\pi_h$$ --> A0{{"$$a$$"}}

S0 & A0 --> R0["$$r_h = r(s, a)$$"]

A0 & S0 --> S1("$$s'$$")

S1 -- "$$v_{h+1}$$" --> R1["$$r_{h+1} + \cdots + r_{H-1} \approx v_{h+1}(s')$$"]

class R0,R1 thick

classDef thick stroke-width: 4px;

class S0,S1 state

class A0 action

class R0,R1 reward

classDef state fill: lightgreen;

classDef action fill: lightyellow;

classDef reward fill: lightblue;

We can treat this operation of taking \(v_h\) to its improved version in eq. 2.11 as a higher-order function known as the Bellman operator.

Definition 2.10 (Bellman operator) Let \(\pi : \mathcal{S} \to \triangle(\mathcal{A})\). the Bellman operator \(\mathcal{J}^{\pi} : (\mathcal{S} \to \mathbb{R}) \to (\mathcal{S} \to \mathbb{R})\) is the higher-order function that takes in a function \(v : \mathcal{S} \to \mathbb{R}\) and returns the r.h.s. of the Bellman equation with \(v\) substituted in:

\[ \mathcal{J}^{\pi}(v) := \left( s \mapsto \mathop{\mathbb{E}}_{\substack{a \sim \pi(s) \\ s' \sim P(s, a)}} [r(s, a) + v(s')] \right). \tag{2.12}\]

The Bellman operator is a crucial tool for reasoning about MDPs. Intuitively, it answers the following question: if we evaluate the next state using \(v\), how good is the current state, if we take a single action from the given policy?

Code

def bellman_operator_looping(

mdp: MDP,

policy: Float[Array, "S A"],

v: Float[Array, " S"],

) -> Float[Array, " S"]:

"""

Looping definition of the Bellman operator.

Concise version is below

"""

v_new = jnp.zeros((mdp.S,))

for s in range(mdp.S):

for a in range(mdp.A):

for s_next in range(mdp.S):

v_new[s] += (

policy[s, a]

* mdp.P[s, a, s_next]

* (mdp.r[s, a] + mdp.gamma * v[s_next])

)

return v_new

# Note that we can concisely implement this using the `q_to_v` and `v_to_q` utilities from above:

def bellman_operator(

mdp: MDP,

policy: Float[Array, "S A"],

v: Float[Array, " S"],

) -> Float[Array, " S"]:

"""For a known finite MDP, the Bellman operator can be exactly evaluated."""

return q_to_v(policy, v_to_q(mdp, v)) # equivalent

return jnp.sum(policy * (mdp.r + mdp.gamma * mdp.P @ v), axis=1)

latex(

bellman_operator_looping,

id_to_latex={"v_new": r"v^\text{lhs}", "policy": r"\pi", "s_next": r"s'"},

trim_prefixes={"mdp"},

subscript_type="call",

)$

The Bellman operator also gives us a concise way to express the Bellman consistency equations (eq. 2.9) for the value function:

\[ V_h^\pi = \mathcal{J}^{\pi_h}(V_{h+1}^\pi) \tag{2.13}\]

The Bellman consistency equations (eq. 2.9) give us a convenient algorithm for evaluating stationary policies: it expresses the value function at timestep \(h\) as a function of the value function at timestep \(h+1\). This means we can start at the end of the time horizon, where the value is known, and work backwards in time, using the Bellman operator to compute the value function at each time step.

Code

def dp_eval_finite(mdp: MDP, policy: Float[Array, "S A"]) -> Float[Array, "H S"]:

"""Evaluate a policy using dynamic programming."""

V = np.zeros((mdp.H + 1, mdp.S)) # initialize to 0 at end of time horizon

for h in range(mdp.H - 1, -1, -1):

V[h] = bellman_operator(mdp, policy[h], V[h + 1])

return V[:-1]

latex(

dp_eval_finite,

id_to_latex={"bellman_operator": r"\mathcal{J}", "policy": r"\pi", "V": "V^\pi"},

)$

This runs in time \(O(H \cdot |\mathcal{S}|^2 \cdot |\mathcal{A}|)\). Note that the implementation of the Bellman operator in fig. 2.7 can be easily modified to compute \(Q^\pi\) as an intermediate step. Do you see how?

Example 2.6 (Tidying policy evaluation) Let’s evaluate the policy from ex. 2.4 in the tidying MDP that tidies if and only if the room is messy. We’ll use the Bellman consistency equation to compute the value function at each time step.

\[ \begin{aligned} V_{H-1}^\pi(\text{orderly}) &= r(\text{orderly}, \text{ignore}) \\ &= 1 \\ V_{H-1}^\pi(\text{messy}) &= r(\text{messy}, \text{tidy}) \\ &= 0 \\ V_{H-2}^\pi(\text{orderly}) &= r(\text{orderly}, \text{ignore}) + \mathop{\mathbb{E}}_{s' \sim P(\text{orderly}, \text{ignore})} [V_{H-1}^\pi(s')] \\ &= 1 + 0.7 \cdot V_{H-1}^{\pi}(\text{orderly}) + 0.3 \cdot V_{H-1}^{\pi}(\text{messy}) \\ &= 1 + 0.7 \cdot 1 + 0.3 \cdot 0 \\ &= 1.7 \\ V_{H-2}^\pi(\text{messy}) &= r(\text{messy}, \text{tidy}) + \mathop{\mathbb{E}}_{s' \sim P(\text{messy}, \text{tidy})} [V_{H-1}^\pi(s')] \\ &= 0 + 1 \cdot V_{H-1}^{\pi}(\text{orderly}) + 0 \cdot V_{H-1}^{\pi}(\text{messy}) \\ &= 1 \\ V_{H-3}^\pi(\text{orderly}) &= r(\text{orderly}, \text{ignore}) + \mathop{\mathbb{E}}_{s' \sim P(\text{orderly}, \text{ignore})} [V_{H-2}^\pi(s')] \\ &= 1 + 0.7 \cdot V_{H-2}^{\pi}(\text{orderly}) + 0.3 \cdot V_{H-2}^{\pi}(\text{messy}) \\ &= 1 + 0.7 \cdot 1.7 + 0.3 \cdot 1 \\ &= 2.49 \\ V_{H-3}^\pi(\text{messy}) &= r(\text{messy}, \text{tidy}) + \mathop{\mathbb{E}}_{s' \sim P(\text{messy}, \text{tidy})} [V_{H-2}^\pi(s')] \\ &= 0 + 1 \cdot V_{H-2}^{\pi}(\text{orderly}) + 0 \cdot V_{H-2}^{\pi}(\text{messy}) \\ &= 1.7 \end{aligned} \]

etc. You may wish to repeat this computation for the other policies to get a better sense of this algorithm.

Code

V_messy = dp_eval_finite(tidy_mdp, tidy_policy_messy_only)

Markdown(tabulate(

zip(*[

["orderly", "messy"],

*V_messy,

]),

["$s$"] + [f"$h={h}$" for h in range(len(V_messy))]

))| \(s\) | \(h=0\) | \(h=1\) | \(h=2\) | \(h=3\) | \(h=4\) | \(h=5\) | \(h=6\) |

|---|---|---|---|---|---|---|---|

| orderly | 5.56217 | 4.79277 | 4.0241 | 3.253 | 2.49 | 1.7 | 1 |

| messy | 4.79277 | 4.0241 | 3.253 | 2.49 | 1.7 | 1 | 0 |

2.3.2 Optimality

We’ve just seen how to evaluate a given policy. But how can we find the best policy for a given environment? We must first define what it means for a policy to be optimal. We’ll investigate optimality for history-independent policies. In Theorem 2.6, we’ll see that constraining ourselves to history-independent policies isn’t actually a constraint: the best history-independent policy performs at least as well as the best history-dependent policy, in all scenarios!

Remark 2.4 (Intuition). How is this possible? Recall the Markov property (def. 2.5), which says that once we know the current state, the next state becomes independent from the past history. This means that history-dependent policies, intuitively speaking, don’t benefit over simply history-independent ones in MDPs.

Definition 2.11 (Optimal policies) Let \(\pi^\star\) be a history-independent policy. We say that \(\pi^\star\) is optimal if \(V_h^{\pi^\star}(s) \ge V_h^{\pi}(s)\) for any history-independent policy \(\pi\) at any time \(h\in [H]\). In other words, an optimal history-independent policy achieves the highest possible expected remaining reward in every state at every time.

Example 2.7 (Optimal policy in the tidying MDP) For the tidying MDP (ex. 2.3), you might guess that the optimal strategy is

\[ \pi(s) = \begin{cases} \text{tidy} & s = \text{messy} \\ \text{ignore} & \text{otherwise} \end{cases} \tag{2.14}\]

since this keeps the room in the “orderly” state in which reward can be obtained.

Definition 2.12 (Optimal value function) Given a state \(s\) at time \(h\), the optimal value \(V^\star_h(s)\) is the maximum expected remaining reward achievable by any history-independent policy \(\pi\):

\[ V^\star_h(s) := \max_{\pi} V^{\pi}_h(s). \tag{2.15}\]

The optimal action-value function is defined analogously:

\[ Q^\star_h(s, a) := \max_{\pi} Q^{\pi}_h(s, a). \tag{2.16}\]

These satisfy the following relationship (cf Theorem 2.2):

Theorem 2.4 (Relating \(V^\star\) and \(Q^\star\)) The optimal value of a state is the maximum value across actions from that state:

\[ V_h^\star(s) = \max_{a \in \mathcal{A}} Q^\star_h(s, a). \tag{2.17}\]

The optimal value of an action is the immediate reward plus the expected value from the next state:

\[ Q^\star_h(s, a) = r(s, a) + \mathop{\mathbb{E}}_{s' \sim P(s, a)} [V^\star_{h+1}(s')]. \tag{2.18}\]

Proof. We first prove eq. 2.17. We begin by expanding the definition of the optimal value function using eq. 2.7:

\[ \begin{aligned} V_h^\star(s) &= \max_\pi V^\pi_h(s) \\ &= \max_\pi \mathop{\mathbb{E}}_{a \sim \pi_h(s)} [ Q^\pi_h(s, a) ]. \end{aligned} \tag{2.19}\]

Now note that \(Q^\pi_h\) doesn’t depend on \(\pi_h\), so we can split the maximization over \(\pi = (\pi_0, \dots, \pi_{H-1})\) into an outer optimization over the immediate action \(\pi_h\) and an inner optimization over the remaining actions \(\pi_{h+ 1}, \dots, \pi_{H-1}\):

\[ \max_\pi \mathop{\mathbb{E}}_{a \sim \pi_h(s)} \Big[ Q^\pi_h(s, a) ] = \max_{\pi_h} \mathop{\mathbb{E}}_{a \sim \pi_h(s)} \Big[ \max_{\pi_{h+1}, \dots, \pi_{H-1}} Q^\pi_h(s, a) \Big]. \tag{2.20}\]

But now the inner quantity is exactly \(Q^\star_h(s, a)\) as defined in eq. 2.16. Now note that maximizing over \(\pi_h\) reduces to just maximizing over the action taken:

\[ \max_{\pi_h} \mathop{\mathbb{E}}_{a \sim \pi_h(s)} [\cdots] = \max_{a \in \mathcal{A}} [\cdots]. \tag{2.21}\]

This proves eq. 2.17.

We now prove eq. 2.18. We begin by using eq. 2.8:

\[ \begin{aligned} Q^\star_h(s, a) &= \max_\pi Q^\pi_h(s, a) \\ &= \max_\pi \Big[ r(s, a) + \mathop{\mathbb{E}}_{s' \sim P(s, a)}[ V^\pi_{h+1}(s') ] \Big] \end{aligned} \]

We can move the maximization over \(\pi\) under the expectation since \(s, a\), and \(s'\) are all independent of \(\pi\). Substituting the definition of \(V^\star\) concludes the proof.

As in the Bellman consistency equations (Theorem 2.3), combining eq. 2.17 and eq. 2.18 gives the Bellman optimality equations.

Theorem 2.5 (Bellman optimality equations) The optimal value function function (def. 2.12) satisfies, for all timesteps \(h\in [H-1]\),

\[ V_h^\star(s) = \max_a r(s, a) + \mathop{\mathbb{E}}_{s' \sim P(s, a)} [V_{h+1}^\star(s')]. \tag{2.22}\]

Like the Bellman consistency equations (eq. 2.9), This is a system of \(H |\mathcal{S}|\) equations in \(H |\mathcal{S}|\) unknowns, so \(V^\star\) is the unique solution (assuming all the states are distinguishable from each other).

Note that the letter \(\pi\) doesn’t appear. This will prove useful when we discuss off-policy algorithms later in the course.

We now construct a deterministic history-independent optimal policy by acting greedily with respect to the optimal action-value function:

Definition 2.13 (Greedy policies) For any sequence of functions \(q_h : \mathcal{S} \times \mathcal{A} \to \mathbb{R}\) for \(h = 0, \dots, H-1\), we define the greedy policy \(\pi^q\) to be the deterministic policy that selects the action with the highest value according to \(q\) at each state:

\[ \pi_h^q(s) := \arg\max_{a \in \mathcal{A}} q_h(s, a). \tag{2.23}\]

Note that it is not true in general that \(Q^{\pi^q} = q\) for a greedy policy! For one, \(q\) might not even be a consistent Q function.

Code

def q_to_greedy(q: Float[Array, "S A"]) -> Float[Array, "S A"]:

"""

Get the (deterministic) greedy policy with respect to an action-value function.

Return the policy as a matrix of shape (S, A) where each row is a one-hot vector.

"""

A = q.shape[1]

a_ary = jnp.argmax(q, axis=1)

return jnp.eye(A)[a_ary]

def v_to_greedy(mdp: MDP, v: Float[Array, " S"]) -> Float[Array, "S A"]:

"""Get the (deterministic) greedy policy with respect to a value function."""

return q_to_greedy(v_to_q(mdp, v))Theorem 2.6 (A greedy optimal policy) The greedy policy \(\pi^{Q^\star}\), where \(Q^\star\) is the optimal action-value function (eq. 2.16), is an optimal policy (def. 2.11). Furthermore, \(\pi^{Q^\star}\) is optimal across all policies \(\pi^\text{any}\), including history-dependent ones, in the sense that for any partial trajectory

\[ \tau_h= (s_0, a_0, r_0, \dots, s_{h-1}, a_{h-1}, r_{h-1}, s_h), \]

it achieves a higher expected remaining reward:

\[ \mathop{\mathbb{E}}_{\tau \sim \rho^{\pi^{Q^\star}}} [ r_h+ \cdots + r_{H-1} \mid \tau_h ] \ge \mathop{\mathbb{E}}_{\tau \sim \rho^{\pi^\text{any}}} [ r_h+ \cdots + r_{H-1} \mid \tau_h ]. \tag{2.24}\]

By conditioning on \(\tau_h\), we mean to condition on the event that \(s_{h'}, a_{h'}\) are the state and action visited at time \(h' < h\), and \(s_h\) is the state at time \(h\). (If the reward function were stochastic, we would condition on the observed rewards as well.)

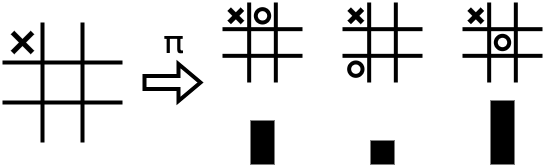

We will now prove by induction that \(\pi^{Q^\star}\) is optimal. Essentially, we begin at the end of the trajectory, where the optimal action is simply the one that yields highest immediate reward. Once the optimal values at time \(H-1\) are known, we use these to compute the optimal values at time \(H-2\) using the Bellman optimality equations (eq. 2.38), and proceed backward through the trajectory.

graph RL

Q0["$$Q^\star_{H-1} = r$$"] -- "$$\max_{a \in \mathcal{A}}$$" --> V0

Q0 -- "$$\arg\max_{a \in \mathcal{A}}$$" --> P0["$$\pi^\star_{H-1}$$"]

V0["$$V^\star_{H-1}$$"] -- "Bellman equations" --> Q1

Q1["$$Q^\star_{H-2}$$"] -- "$$\max_{a \in \mathcal{A}}$$" --> V1

Q1 -- "$$\arg\max_{a \in \mathcal{A}}$$" --> P1["$$\pi^\star_{H-2}$$"]

V1["$$V^\star_{H-2}$$"] -- "Bellman equations" --> Q2

Q2["..."]

Proof. At the end of the trajectory (time step \(H-1\)), we can’t take any more actions, so the \(Q\)-function simply returns the immediate reward:

\[ Q^\star_{H-1}(s, a) = r(s, a). \tag{2.25}\]

\(\pi^{Q^\star}\) is then optimal across all policies at time \(H-1\), since the policy only determines a single action, the one which maximizes (expected) remaining reward:

\[ \mathop{\mathbb{E}}_{\tau \sim \rho^{\pi^{Q^\star}}}[ r_{H-1} \mid s_{H- 1} = s ] = \max_{a \in \mathcal{A}} r(s, a). \tag{2.26}\]

For the inductive step, suppose \(\pi^{Q^\star}\) is optimal across all policies at time \(h+1\). Note that this implies \(V^{\pi^{Q^\star}} = V^\star\). Then for any other policy \(\pi^\text{any}\) (which could be history-dependent), and for any partial trajectory \(\tau_{h}\),

\[ \begin{aligned} &{} \mathop{\mathbb{E}}_{\tau \sim \rho^{\pi^\text{any}}} [ r_h+ \cdots + r_{H-1} \mid \tau_{h} ] \\ ={}& \mathop{\mathbb{E}}_{\substack{a \sim \pi^\text{any}_h(\tau_h) \\ s' \sim P(s_h, a)}} \left[ r_h+ \mathop{\mathbb{E}}_{\tau \sim \rho^{\pi^\text{any}}} [ r_{h+1} + \cdots + r_{H-1} \mid \tau_{h}, a_h= a, s_{h+1} = s' ] \right] \\ \le{}& \mathop{\mathbb{E}}_{\substack{a \sim \pi^\text{any}_h(\tau_h) \\ s' \sim P(s_h, a)}} \left[ r_h+ V^\star_{h+1}(s') \right] \\ \le{}& \max_{a \in \mathcal{A}} r(s_h, a) + \mathop{\mathbb{E}}_{s' \sim P(s_h, a)} \left[ V^\star_{h+1}(s') \right] \\ ={}& V^\star_h(s_h). \end{aligned} \tag{2.27}\]

This completes the inductive step, which shows that \(\pi^{Q^\star}\) is optimal across all policies at every time \(h\in [H]\).

Code

# TODO fix latexify bugs

def find_optimal_policy(mdp: MDP):

Q = np.zeros((mdp.H, mdp.S, mdp.A))

policy = np.zeros((mdp.H, mdp.S, mdp.A))

V = np.zeros((mdp.H+1, mdp.S)) # initialize to 0 at end of time horizon

for h in range(mdp.H - 1, -1, -1):

Q[h] = mdp.r + mdp.P @ V[h + 1]

policy[h] = jnp.eye(mdp.S)[jnp.argmax(Q[h], axis=1)] # one-hot

V[h] = jnp.max(Q[h], axis=1)

Q = jnp.stack(Q)

policy = jnp.stack(policy)

V = jnp.stack(V[:-1])

return policy, V, Q

latex(find_optimal_policy, id_to_latex={"policy": r"\pi"}, trim_prefixes={"mdp"})$

At each of the \(H\) timesteps, we must compute \(Q^{\star}_h\) for each of the \(|\mathcal{S}| |\mathcal{A}|\) state-action pairs. Each computation takes \(|\mathcal{S}|\) operations to evaluate the average value over \(s'\). Computing \(\pi^\star_h\) and \(V^\star_h\) then requires computing the value-maximizing action in each state, which is an additional \(O(|\mathcal{S}| \mathcal{A}|)\) comparisons. This is dominated by the earlier term, resulting in a total computation time of \(O(H \cdot |\mathcal{S}|^2 \cdot |\mathcal{A}|)\).

Note that this algorithm is identical to the policy evaluation algorithm (fig. 2.8), but instead of averaging over the actions chosen by a policy, we instead take a maximum over the action-values. We’ll see this relationship between policy evaluation and optimal policy computation show up again in the infinite-horizon setting.

Code

pi_opt, V_opt, Q_opt = find_optimal_policy(tidy_mdp)

assert jnp.allclose(pi_opt, tidy_policy_messy_only)

assert jnp.allclose(V_opt, V_messy)

assert jnp.allclose(Q_opt[:-1], v_ary_to_q_ary(tidy_mdp, V_messy)[1:])

"Assertions passed (the 'tidy when messy' policy is optimal)""Assertions passed (the 'tidy when messy' policy is optimal)"Let us review some equivalent definitions of an optimal policy:

Remark 2.5 (Equivalent definitions of an optimal policy). Let \(\pi\) be a stationary policy. Then the following are all equivalent:

- \(\pi\) is optimal across history-independent policies (def. 2.11).

- \(\pi\) is optimal across all policies (eq. 2.24).

- \(V^\pi = V^\star\).

- \(Q^\pi = Q^\star\).

- \(V^\pi\) satisfies the Bellman optimality equations (eq. 2.38).

1 and 3 are the same by definition. 3 and 4 are the same since \(V^\star\) and \(Q^\star\) uniquely define each other by eq. 2.17 and \(V^\pi\) and \(Q^\pi\) uniquely define each other by eq. 2.7 and eq. 2.8. 3 and 5 are the same by the Bellman optimality equations (Theorem 2.5). 1 and 2 are the same as shown in the proof of Theorem 2.6.

Remark 2.6 (Why stochastic policies). Given that there exists an optimal deterministic policy, why do we try ever try to learn stochastic policies? We will see a partial answer to this when we discuss the exploration-exploitation tradeoff in Chapter 4. So far, we’ve assumed that the environment is totally known. If it isn’t, however, we need some way to explore different possible actions, and stochastic policies provide a natural way to do this.

2.4 Infinite-horizon Markov decision processes

What if we allow trajectories to continue for an infinite amount of time, that is, set the time horizon \(H\) to infinity? This might seem impractical, since in the real world, most of the trajectories we care about terminate after some fixed number of steps. However, we will see that infinite-horizon MDPs are simpler in certain regards and can serve as good approximations to finite-horizon tasks. A crucial result is that Bellman operators (def. 2.10) in this setting are contraction mappings (Theorem 2.8), which yield simple fixed-point iteration algorithms for policy evaluation (Section 2.4.3.2) and computing the optimal value function (Section 2.4.4.1). Finally, we’ll present the policy iteration algorithm (Section 2.4.4.2). In addition to being an effective tool in its own right, we will find that policy iteration serves as a useful framework for designing and analyzing later algorithms.

2.4.1 Differences from the finite horizon setting

Remark 2.7 (Discounted rewards). First of all, note that the total reward \(r_0 + r_{1} + \cdots\) might blow up to infinity. To ensure that we work with bounded quantities, we insert a discount factor \(\gamma \in [0, 1)\) such that rewards become less valuable the further into the future they are:

\[ r_0 + \gamma r_{1} + \gamma^2 r_{2} + \cdots = \sum_{h=0}^\infty \gamma^hr_{h} \le R \frac{1}{1 - \gamma}, \tag{2.28}\]

where \(R\) is the maximum possible reward. We can think of \(\gamma\) as measuring how much we care about the future: if it’s close to \(0\), we only care about the near-term rewards; if it’s close to \(1\), we put more weight into future rewards.

You can also analyze \(\gamma\) as the probability of continuing the trajectory at each time step. (This is equivalent to \(H\) being distributed by a First Success distribution with success probability \(\gamma\).) This accords with the above interpretation: if \(\gamma\) is close to \(0\), the trajectory will likely be very short, while if \(\gamma\) is close to \(1\), the trajectory will likely continue for a long time.

The other components of the MDP remain from the finite-horizon setting (def. 2.4):

\[ \mathcal{M} = (\mathcal{S}, \mathcal{A}, P_0, P, r, \gamma). \]

Code

# Code-wise, we can reuse the `MDP` class from before @def-finite-horizon-mdp and set `mdp.H = float('inf')`.

tidy_mdp_inf = tidy_mdp._replace(H=float("inf"), gamma=0.95)Remark 2.8 (Stationary policies). Time-dependent policies become difficult to handle in the infinite-horizon case. Not only would they be computationally impossible to store, but also, many of the DP approaches we saw required us to start at the end of the trajectory, which is no longer possible. We’ll shift to stationary policies \(\pi : \mathcal{S} \to \mathcal{A}\) (deterministic) or \(\triangle(\mathcal{A})\) (stochastic), which don’t explicitly depend on the timestep.

Exercise 2.6 (Stationary policy examples) Which of the policies in ex. 2.4 (policies in the tidying MDP) are stationary?

Definition 2.14 (Value functions) We’ll also consider stationary value functions \(V^\pi : \mathcal{S} \to \mathbb{R}\) and \(Q^\pi : \mathcal{S} \times \mathcal{A} \to \mathbb{R}\). These now represent the expected total discounted reward:

\[ \begin{aligned} V^\pi(s) &= \mathop{\mathbb{E}}_{\tau \sim \rho^\pi} [r_0 + \gamma r_{1} + \gamma^2 r_{2} + \cdots \mid s_0 = s] \\ Q^\pi(s, a) &= \mathop{\mathbb{E}}_{\tau \sim \rho^\pi} [r_0 + \gamma r_{1} + \gamma^2 r_{2} + \cdots \mid s_0 = s, a_0 = a] \\ \end{aligned} \tag{2.29}\]

Exercise 2.7 (Time-independent) Note that we add up the rewards starting from time \(0\), whereas in the finite-horizon case, we needed to explicitly add the rewards from time \(h\) onwards. Heuristically speaking, why does it no longer matter which time step we start on when defining the value function? Refer back to the Markov property (def. 2.5).

Theorem 2.7 (Bellman consistency equations) The Bellman consistency equations play the same role they did in the finite-horizon setting (Theorem 2.3). We need to insert a factor of \(\gamma\) to account for the discounting, and the \(h\) subscript is gone since \(V^\pi\) is stationary:

\[ V^\pi(s) = \mathop{\mathbb{E}}_{\substack{a \sim \pi(s) \\ s' \sim P(s, a)}} [r(s, a) + \gamma V^\pi(s')]. \tag{2.30}\]

The value function and action-value function are still related in the same way:

\[ \begin{aligned} V^\pi(s) &= \mathop{\mathbb{E}}_{a \sim \pi(s)} [Q(s, a)] \\ Q^\pi(s, a) &= r(s, a) + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, a)} [V^\pi(s')]. \end{aligned} \tag{2.31}\]

2.4.2 Contraction mappings

Recall that a policy’s Bellman operator takes a guess for the policy’s value function and returns an improved guess using one step of the policy (def. 2.10). In the infinite-horizon setting, this has the same form:

Definition 2.15 (Bellman operator) Let \(\pi : \mathcal{S} \to \triangle(\mathcal{A})\) be a stationary policy. Its Bellman operator is defined

\[ \mathcal{J}^{\pi}(v) := \Big( s \mapsto \mathop{\mathbb{E}}_{\substack{a \sim \pi(s) \\ s' \sim P(s, a)}} [r(s, a) + \gamma v(s')] \Big). \tag{2.32}\]

The crucial property of the Bellman operator is that it is a contraction mapping for any policy. Intuitively, if we start with two guesses \(v, u : \mathcal{S} \to \mathbb{R}\), if we repeatedly apply the Bellman operator to each of them, they will get closer and closer together at an exponential rate.

Definition 2.16 (Contraction mapping) Let \(X\) be a set with a norm \(\|\cdot\|\). We call an operator \(f: X \to X\) a contraction mapping if for any \(x, y \in X\),

\[ \|f(x) - f(y)\| \le \gamma \|x - y\| \]

for some fixed \(\gamma \in (0, 1)\).

Exercise 2.8 (Contraction mappings pull points together) Show that for a contraction mapping \(f\) with coefficient \(\gamma\), for all \(t \in \mathbb{N}\),

\[ \|f^{(t)}(x) - f^{(t)}(y)\| \le \gamma^t \|x - y\|, \tag{2.33}\]

i.e. any two points will be pushed closer by at least a factor of \(\gamma\) at each iteration.

It is a powerful fact, known as the Banach fixed-point theorem, that every contraction mapping has a unique fixed point \(x^\star\) such that \(f(x^\star) = x^\star\). This means that if we repeatedly apply \(f\) to any starting point, we will eventually converge to \(x^\star\):

\[ \|f^{(t)}(x) - x^\star\| \le \gamma^t \|x - x^\star\|. \tag{2.34}\]

Let’s return to the RL setting and apply this result to the Bellman operator. How can we measure the distance between two functions \(v, u : \mathcal{S} \to \mathbb{R}\)? We’ll take the supremum norm as our distance metric:

\[ \| v - u \|_{\infty} := \sup_{s \in \mathcal{S}} |v(s) - u(s)|, \]

i.e. we compare the functions on the state that causes the biggest gap between them. The Bellman consistency equations (Theorem 2.7) state that \(V^\pi\) is the fixed point of \(\mathcal{J}^\pi\). Then eq. 2.34 implies that if we repeatedly apply \(\mathcal{J}^\pi\) to any starting guess, we will eventually converge to \(V^\pi\):

\[ \|(\mathcal{J}^\pi)^{(t)}(v) - V^\pi \|_{\infty} \le \gamma^{t} \| v - V^\pi\|_{\infty}. \tag{2.35}\]

We’ll use this useful fact to prove the convergence of several algorithms later on.

Theorem 2.8 (The Bellman operator is a contraction mapping) Let \(\pi\) be a stationary policy. There exists \(\gamma \in (0, 1)\) such that for any two functions \(u, v : \mathcal{S} \to \mathbb{R}\),

\[ \|\mathcal{J}^{\pi} (v) - \mathcal{J}^{\pi} (u) \|_{\infty} \le \gamma \|v - u \|_{\infty}. \]

Proof. For all states \(s \in \mathcal{S}\),

\[ \begin{aligned} |[\mathcal{J}^{\pi} (v)](s) - [\mathcal{J}^{\pi} (u)](s)|&= \Big| \mathop{\mathbb{E}}_{a \sim \pi(s)} \left[ r(s, a) + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, a)} v(s') \right] \\ &\qquad - \mathop{\mathbb{E}}_{a \sim \pi(s)} \left[r(s, a) + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, a)} u(s') \right] \Big| \\ &= \gamma \left|\mathop{\mathbb{E}}_{s' \sim P(s, a)} [v(s') - u(s')] \right| \\ &\le \gamma \mathop{\mathbb{E}}_{s' \sim P(s, a)}|v(s') - u(s')| \qquad \text{(Jensen's inequality)} \\ &\le \gamma \max_{s'} |v(s') - u(s')| \\ &= \gamma \|v - u \|_{\infty}. \end{aligned} \]

2.4.3 Policy evaluation

The backwards DP technique we used in the finite-horizon case (Section 2.3.1) no longer works since there is no “final timestep” to start from. We’ll need another approach to policy evaluation.

The Bellman consistency equations (Theorem 2.7) yield a system of \(|\mathcal{S}|\) equations we can solve to evaluate a deterministic policy exactly. For a faster approximate solution, we can iterate the policy’s Bellman operator, since we know that it has a unique fixed point at the true value function.

2.4.3.1 Matrix inversion for deterministic policies

Note that when the policy \(\pi\) is deterministic, the actions can be determined from the states, and so we can chop off the action dimension for the rewards and state transitions:

\[ \begin{aligned} r^{\pi} &\in \mathbb{R}^{|\mathcal{S}|} & P^{\pi} &\in [0, 1]^{|\mathcal{S}| \times |\mathcal{S}|} & P_0 &\in [0, 1]^{|\mathcal{S}|} \\ \pi &\in \mathcal{A}^{|\mathcal{S}|} & V^\pi &\in \mathbb{R}^{|\mathcal{S}|} & Q^\pi &\in \mathbb{R}^{|\mathcal{S}| \times |\mathcal{A}|}. \end{aligned} \]

For \(P^\pi\), we’ll treat the rows as the states and the columns as the next states. Then \(P^\pi_{s, s'}\) is the probability of transitioning from state \(s\) to state \(s'\) under policy \(\pi\).

Example 2.8 (Tidying MDP) The tabular MDP from ex. 2.3 has \(|\mathcal{S}| = 2\) and \(|\mathcal{A}| = 2\). Let’s write down the quantities for the policy \(\pi\) that tidies if and only if the room is messy:

\[ r^{\pi} = \begin{bmatrix} 1 \\ 0 \end{bmatrix}, \quad P^{\pi} = \begin{bmatrix} 0.7 & 0.3 \\ 1 & 0 \end{bmatrix}, \quad P_0 = \begin{bmatrix} 1 \\ 0 \end{bmatrix} \]

We’ll see how to evaluate this policy in the next section.

The Bellman consistency equation for a deterministic policy can be written in tabular notation as

\[ V^\pi = r^\pi + \gamma P^\pi V^\pi. \]

(Unfortunately, this notation doesn’t simplify the expression for \(Q^\pi\).) This system of equations can be solved with a matrix inversion:

\[ V^\pi = (I - \gamma P^\pi)^{-1} r^\pi. \tag{2.36}\]

Exercise 2.9 (Matrix invertibility) Note we’ve assumed that \(I - \gamma P^\pi\) is invertible. Can you see why this is the case? (Recall that a linear operator, i.e. a square matrix, is invertible if and only if its null space is trivial; that is, it doesn’t map any nonzero vector to zero. Show that \(I - \gamma P^\pi\) maps any nonzero vector to another nonzero vector.)

Code

def eval_deterministic_infinite(

mdp: MDP, policy: Float[Array, "S A"]

) -> Float[Array, " S"]:

pi = jnp.argmax(policy, axis=1) # un-one-hot

P_pi = mdp.P[jnp.arange(mdp.S), pi]

r_pi = mdp.r[jnp.arange(mdp.S), pi]

return jnp.linalg.solve(jnp.eye(mdp.S) - mdp.gamma * P_pi, r_pi)Example 2.9 (Tidying policy evaluation) Let’s use the same policy \(\pi\) that tidies if and only if the room is messy. Setting \(\gamma = 0.95\), we must invert

\[ I - \gamma P^{\pi} = \begin{bmatrix} 1 - 0.95 \times 0.7 & - 0.95 \times 0.3 \\ - 0.95 \times 1 & 1 - 0.95 \times 0 \end{bmatrix} = \begin{bmatrix} 0.335 & -0.285 \\ -0.95 & 1 \end{bmatrix}. \]

The inverse to two decimal points is

\[ (I - \gamma P^{\pi})^{-1} = \begin{bmatrix} 15.56 & 4.44 \\ 14.79 & 5.21 \end{bmatrix}. \]

Thus the value function is

\[ V^{\pi} = (I - \gamma P^{\pi})^{-1} r^{\pi} = \begin{bmatrix} 15.56 & 4.44 \\ 14.79 & 5.21 \end{bmatrix} \begin{bmatrix} 1 \\ 0 \end{bmatrix} = \begin{bmatrix} 15.56 \\ 14.79 \end{bmatrix}. \]

Let’s sanity-check this result. Since rewards are at most \(1\), the maximum cumulative return of a trajectory is at most \(1/(1-\gamma) = 20\). We see that the value function is indeed slightly lower than this.

Code

eval_deterministic_infinite(tidy_mdp_inf, tidy_policy_messy_only[0])Array([15.56419, 14.78598], dtype=float32)2.4.3.2 Iterative policy evaluation

The matrix inversion above takes roughly \(O(|\mathcal{S}|^3)\) time. It also only works for deterministic policies. Can we trade off the requirement of finding the exact value function for a faster approximate algorithm that will also extend to stochastic policies?

Let’s use the Bellman operator to define an iterative algorithm for computing the value function. We’ll start with an initial guess \(v^{(0)}\) with elements in \([0, 1/(1-\gamma)]\) and then iterate the Bellman operator:

\[ v^{(t+1)} = \mathcal{J}^{\pi}(v^{(t)}), \]

i.e. \(v^{(t)} = (\mathcal{J}^{\pi})^{(t)} (v^{(0)})\). Note that each iteration takes \(O(|\mathcal{S}|^2)\) time for the matrix-vector multiplication.

Code

def supremum_norm(v):

return jnp.max(jnp.abs(v)) # same as jnp.linalg.norm(v, jnp.inf)

def loop_until_convergence(op, v, ε=1e-6):

"""Repeatedly apply op to v until convergence (in supremum norm)."""

while True:

v_new = op(v)

if supremum_norm(v_new - v) < ε:

return v_new

v = v_new

def iterative_evaluation(mdp: MDP, pi: Float[Array, "S A"], ε=1e-6) -> Float[Array, " S"]:

op = partial(bellman_operator, mdp, pi)

return loop_until_convergence(op, jnp.zeros(mdp.S), ε)Then, as we showed in eq. 2.35, by the Banach fixed-point theorem:

\[ \|v^{(t)} - V^\pi \|_{\infty} \le \gamma^{t} \| v^{(0)} - V^\pi\|_{\infty}. \]

Code

iterative_evaluation(tidy_mdp_inf, tidy_policy_messy_only[0])Array([15.564166, 14.785956], dtype=float32)Remark 2.9 (Convergence of iterative policy evaluation). How many iterations do we need for an \(\epsilon\)-accurate estimate? We can work backwards to solve for \(t\) (note that \(\log \gamma < 0\)):

\[ \begin{aligned} \gamma^t \|v^{(0)} - V^\pi\|_{\infty} &\le \epsilon \\ t &\ge \frac{\log (\epsilon / \|v^{(0)} - V^\pi\|_{\infty})}{\log \gamma} \\ &= \frac{\log (\|v^{(0)} - V^\pi\|_{\infty} / \epsilon)}{\log (1 / \gamma)}, \end{aligned} \]

and so the number of iterations required for an \(\epsilon\)-accurate estimate is

\[ T = O\left( \frac{1}{1-\gamma} \log\left(\frac{1}{\epsilon (1-\gamma)}\right) \right). \]

Note that we’ve applied the inequalities \(\|v^{(0)} - V^\pi\|_{\infty} \le 1/(1-\gamma)\) and \(\log (1/x) \ge 1-x\).

2.4.4 Optimality

Now let’s move on to solving for an optimal policy in the infinite-horizon case. As in def. 2.11, an optimal policy \(\pi^\star\) is one that does at least as well as any other policy in all situations. That is, for all policies \(\pi\), times \(h\in \mathbb{N}\), and initial trajectories \(\tau_{\le h} = (s_0, a_0, r_0, \dots, s_h)\),

\[ \begin{aligned} & \mathop{\mathbb{E}}_{\tau \sim \rho^{\pi^{\star}}}[ r_h+ \gamma r_{h+1} + \gamma^2 r_{h+2} + \cdots \mid \tau_{\le h}] \\ \ge{} & \mathop{\mathbb{E}}_{\tau \sim \rho^{\pi}}[ r_h+ \gamma r_{h+1} + \gamma^2 r_{h+2} + \cdots \mid \tau_{\le h}] \end{aligned} \tag{2.37}\]

Once again, all optimal policies share the same optimal value function \(V^\star\), and the greedy policy with respect to this value function is optimal.

Exercise 2.10 (The greedy optimal policy) Verify this by modifying the proof Theorem 2.6 from the finite-horizon case.

So how can we compute such an optimal policy? We can’t use the backwards DP approach from the finite-horizon case fig. 2.10 since there’s no “final timestep” to start from. Instead, we’ll exploit the fact that the Bellman consistency equation eq. 2.29 for the optimal value function doesn’t depend on any policy:

\[ V^\star(s) = \max_a \left[ r(s, a) + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, a)} V^\star(s'). \right] \tag{2.38}\]

As before, thinking of the r.h.s. of eq. 2.38 as an operator on value functions gives the Bellman optimality operator

\[ [\mathcal{J}^{\star}(v)](s) = \max_a \left[ r(s, a) + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, a)} v(s') \right] \tag{2.39}\]

Code

def bellman_optimality_operator(mdp: MDP, v: Float[Array, " S"]) -> Float[Array, " S"]:

return jnp.max(mdp.r + mdp.gamma * mdp.P @ v, axis=1)

def check_optimal(v: Float[Array, " S"], mdp: MDP):

return jnp.allclose(v, bellman_optimality_operator(v, mdp))2.4.4.1 Value iteration

Since the optimal policy is still a policy, our result that the Bellman operator is a contracting map still holds, and so we can repeatedly apply this operator to converge to the optimal value function! This algorithm is known as value iteration.

Code

def value_iteration(mdp: MDP, ε: float = 1e-6) -> Float[Array, " S"]:

"""Iterate the Bellman optimality operator until convergence."""

op = partial(bellman_optimality_operator, mdp)

return loop_until_convergence(op, jnp.zeros(mdp.S), ε)Code

value_iteration(tidy_mdp_inf)Array([15.564166, 14.785956], dtype=float32)Note that the runtime analysis for an \(\epsilon\)-optimal value function is exactly the same as Section 2.4.3.2! This is because value iteration is simply the special case of applying iterative policy evaluation to the optimal value function.

As the final step of the algorithm, to return an actual policy \(\hat \pi\), we can simply act greedily with respect to the final iteration \(v^{(T)}\) of our above algorithm:

\[ \hat \pi(s) = \arg\max_a \left[ r(s, a) + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, a)} v^{(T)}(s') \right]. \tag{2.40}\]

We must be careful, though: the value function of this greedy policy, \(V^{\hat \pi}\), is not the same as \(v^{(T)}\), which need not even be a well-defined value function for some policy!

The bound on the policy’s quality is actually quite loose: if \(\|v^{(T)} - V^\star\|_{\infty} \le \epsilon\), then the greedy policy \(\hat \pi\) satisfies \(\|V^{\hat \pi} - V^\star\|_{\infty} \le \frac{2\gamma}{1-\gamma} \epsilon\), which might potentially be very large.

Theorem 2.9 (Greedy policy value worsening) \[ \|V^{\hat \pi} - V^\star \|_{\infty} \le \frac{2 \gamma}{1-\gamma} \|v - V^\star\|_{\infty} \]

where \(\hat \pi(s) = \arg\max_a q(s, a)\) is the greedy policy with respect to

\[ q(s, a) = r(s, a) + \mathop{\mathbb{E}}_{s' \sim P(s, a)} v(s'). \]

Proof. We first have

\[ \begin{aligned} V^{\star}(s) - V^{\hat \pi}(s) &= Q^{\star}(s,\pi^\star(s)) - Q^{\hat \pi}(s, \hat \pi(s))\\ &= [Q^{\star}(s,\pi^\star(s)) - Q^{\star}(s, \hat \pi(s))] + [Q^{\star}(s, \hat \pi(s)) - Q^{\hat \pi}(s, \hat \pi(s))]. \end{aligned} \]

Let us bound these two quantities separately.

For the first quantity, note that by the definition of \(\hat \pi\), we have

\[ q(s, \hat \pi(s)) \ge q(s,\pi^\star(s)). \]

Let’s add \(q(s, \hat \pi(s)) - q(s,\pi^\star(s)) \ge 0\) to the first term to get

\[ \begin{aligned} Q^{\star}(s,\pi^\star(s)) - Q^{\star}(s, \hat \pi(s)) &\le [Q^{\star}(s,\pi^\star(s))- q(s,\pi^\star(s))] + [q(s, \hat \pi(s)) - Q^{\star}(s, \hat \pi(s))] \\ &= \gamma \mathop{\mathbb{E}}_{s' \sim P(s, \pi^{\star}(s))} [ V^{\star}(s') - v(s') ] + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, \hat \pi(s))} [ v(s') - V^{\star}(s') ] \\ &\le 2 \gamma \|v - V^{\star}\|_{\infty}. \end{aligned} \]

The second quantity is bounded by

\[ \begin{aligned} Q^{\star}(s, \hat \pi(s)) - Q^{\hat \pi}(s, \hat \pi(s)) &= \gamma \mathop{\mathbb{E}}_{s'\sim P(s, \hat \pi(s))}\left[ V^\star(s') - V^{\hat \pi}(s') \right] \\ & \leq \gamma \|V^{\star} - V^{\hat \pi}\|_\infty \end{aligned} \]

and thus

\[ \begin{aligned} \|V^\star - V^{\hat \pi}\|_\infty &\le 2 \gamma \|v - V^{\star}\|_{\infty} + \gamma \|V^{\star} - V^{\hat \pi}\|_\infty \\ \|V^\star - V^{\hat \pi}\|_\infty &\le \frac{2 \gamma \|v - V^{\star}\|_{\infty}}{1-\gamma}. \end{aligned} \]

So in order to compensate and achieve \(\|V^{\hat \pi} - V^{\star}\| \le \epsilon\), we must have

\[ \|v^{(T)} - V^\star\|_{\infty} \le \frac{1-\gamma}{2 \gamma} \epsilon. \]

This means, using Remark 2.9, we need to run value iteration for

\[ T = O\left( \frac{1}{1-\gamma} \log\left(\frac{\gamma}{\epsilon (1-\gamma)^2}\right) \right) \]

iterations to achieve an \(\epsilon\)-accurate estimate of the optimal value function.

2.4.4.2 Policy iteration

Can we mitigate this “greedy worsening”? What if instead of approximating the optimal value function and then acting greedily by it at the very end, we iteratively improve the policy and value function together? This is the idea behind policy iteration. In each step, we simply set the policy to act greedily with respect to its own value function.

Code

def policy_iteration(mdp: MDP, ε=1e-6) -> Float[Array, "S A"]:

"""Iteratively improve the policy and value function."""

def op(pi):

return v_to_greedy(mdp, eval_deterministic_infinite(mdp, pi))

pi_init = jnp.ones((mdp.S, mdp.A)) / mdp.A # uniform random policy

return loop_until_convergence(op, pi_init, ε)Code

policy_iteration(tidy_mdp_inf)Array([[1., 0.],

[0., 1.]], dtype=float32)Although PI appears more complex than VI, we’ll use Theorem 2.8 to show convergence. This will give us the same runtime bound as value iteration and iterative policy evaluation for an \(\epsilon\)-optimal value function (Remark 2.9), although in practice, PI often converges in fewer iterations. Why so? Intuitively, by iterating through actual policies, we can “skip over” different functions that represent the same policy, which value iteration might iterate through. For a concrete example, suppose we have an MDP with three states \(\mathcal{S} = \{\text A, \text B, \text C\}\). Compare the two functions below:

Code

display(Markdown(tabulate(

{

r"$s$": ["A", "B", "C"],

r"$a = 0$": [0.1, 0.8, 0.4],

r"$a = 1$": [0.2, 0.9, 0.5],

},

"keys"

)))

display(Markdown(tabulate(

{

r"$s$": ["A", "B", "C"],

r"$a = 0$": [0.1, 0.8, 0.4],

r"$a = 1$": [0.3, 1.0, 0.6],

},

"keys"

)))| \(s\) | \(a = 0\) | \(a = 1\) |

|---|---|---|

| A | 0.1 | 0.2 |

| B | 0.8 | 0.9 |

| C | 0.4 | 0.5 |

| \(s\) | \(a = 0\) | \(a = 1\) |

|---|---|---|

| A | 0.1 | 0.3 |

| B | 0.8 | 1 |

| C | 0.4 | 0.6 |

These both map to the same greedy policy, so policy iteration would never iterate through both of these functions, while value iteration might.

Theorem 2.10 (Policy Iteration runtime and convergence) The number of iterations required for an \(\epsilon\)-accurate estimate of the optimal value function is

\[ T = O\left( \frac{1}{1-\gamma} \log\left(\frac{1}{\epsilon (1-\gamma)}\right) \right). \]

Proof. This bound follows from the contraction property eq. 2.35:

\[ \|V^{\pi^{t+1}} - V^\star \|_{\infty} \le \gamma \|V^{\pi^{t}} - V^\star \|_{\infty}. \]

We’ll prove that the iterates of PI respect the contraction property by showing that the policies improve monotonically:

\[ V^{\pi^{t+1}}(s) \ge V^{\pi^{t}}(s). \]

Then we’ll use this to show \(V^{\pi^{t+1}}(s) \ge [\mathcal{J}^{\star}(V^{\pi^{t}})](s)\). Note that

\[ \begin{aligned} (s) &= \max_a \left[ r(s, a) + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, a)} V^{\pi^{t}}(s') \right] \\ &= r(s, \pi^{t+1}(s)) + \gamma \mathop{\mathbb{E}}_{s' \sim P(s, \pi^{t+1}(s))} V^{\pi^{t}}(s') \end{aligned} \]

Since \([\mathcal{J}^{\star}(V^{\pi^{t}})](s) \ge V^{\pi^{t}}(s)\), we then have

\[ \begin{aligned} V^{\pi^{t+1}}(s) - V^{\pi^{t}}(s) &\ge V^{\pi^{t+1}}(s) - \mathcal{J}^{\star} (V^{\pi^{t}})(s) \\ &= \gamma \mathop{\mathbb{E}}_{s' \sim P(s, \pi^{t+1}(s))} \left[V^{\pi^{t+1}}(s') - V^{\pi^{t}}(s') \right]. \end{aligned} \tag{2.41}\]

But note that the expression being averaged is the same as the expression on the l.h.s. with \(s\) replaced by \(s'\). So we can apply the same inequality recursively to get

\[ \begin{aligned} V^{\pi^{t+1}}(s) - V^{\pi^{t}}(s) &\ge \gamma \mathop{\mathbb{E}}_{s' \sim P(s, \pi^{t+1}(s))} \left[V^{\pi^{t+1}}(s') - V^{\pi^{t}}(s') \right] \\ &\ge \gamma^2 \mathop{\mathbb{E}}_{\substack{s' \sim P(s, \pi^{t+1}(s)) \\ s'' \sim P(s', \pi^{t+1}(s'))}} \left[V^{\pi^{t+1}}(s'') - V^{\pi^{t}}(s'') \right]\\ &\ge \cdots \end{aligned} \]

which implies that \(V^{\pi^{t+1}}(s) \ge V^{\pi^{t}}(s)\) for all \(s\) (since the r.h.s. converges to zero). We can then plug this back into eq. 2.41 to get the desired result:

\[ \begin{aligned} V^{\pi^{t+1}}(s) - \mathcal{J}^{\star} (V^{\pi^{t}})(s) &= \gamma \mathop{\mathbb{E}}_{s' \sim P(s, \pi^{t+1}(s))} \left[V^{\pi^{t+1}}(s') - V^{\pi^{t}}(s') \right] \\ &\ge 0 \\ V^{\pi^{t+1}}(s) &\ge [\mathcal{J}^{\star}(V^{\pi^{t}})](s) \end{aligned} \]

This means we can now apply the Bellman convergence result eq. 2.35 to get

\[ \|V^{\pi^{t+1}} - V^\star \|_{\infty} \le \|\mathcal{J}^{\star} (V^{\pi^{t}}) - V^{\star}\|_{\infty} \le \gamma \|V^{\pi^{t}} - V^\star \|_{\infty}. \]

2.5 Key takeaways

Markov decision processes (MDPs) are a framework for sequential decision making under uncertainty. They consist of a state space \(\mathcal{S}\), an action space \(\mathcal{A}\), an initial state distribution \(P_0 \in \triangle(\mathcal{S})\), a transition function \(P(s' \mid s, a)\), and a reward function \(r(s, a)\). They can be finite-horizon (ends after \(H\) timesteps) or infinite-horizon (where rewards scale by \(\gamma \in (0, 1)\) at each timestep). Our goal is to find a policy \(\pi\) that maximizes expected total reward. Policies can be deterministic or stochastic, history-dependent or history-independent, stationary or time-dependent. A policy induces a distribution over trajectories.

We can evaluate a policy by computing its value function \(V^\pi(s)\), which is the expected total reward starting from state \(s\) and following policy \(\pi\). We can also compute the state-action value function \(Q^\pi(s, a)\), which is the expected total reward starting from state \(s\), taking action \(a\), and then following policy \(\pi\). In the finite-horizon setting, these also depend on the timestep \(h\).

The Bellman consistency equation is an equation that the value function must satisfy. It can be used to solve for the value functions exactly. Thinking of the r.h.s. of this equation as an operator on value functions gives the Bellman operator.

In the finite-horizon setting, we can compute the optimal policy using dynamic programming. In the infinite-horizon setting, we can compute the optimal policy using value iteration or policy iteration.

2.6 Bibliographic notes and further reading

The MDP framework can be traced to Puterman (1994). The proof of Theorem 2.6 can be found in Agarwal et al. (2022).

MDPs are the most common framework used throughout RL. See Section 1.4 for a list of popular textbooks on RL.

In most real-world problems, we don’t observe the entire state of the environment. Instead, we only get access to what our senses can perceive. This is what makes games like hide-and-seek enjoyable. We can model such environment as partially observed MDPs (POMDPs). Powell (2022) presents a unified framework for sequential decision problems.

Another important bandit algorithm is Gittins indices (Gittins, 2011). The derivation of this algorithm is out of scope of this course.